Idea In Brief

Governments face challenges in understanding AI's implications

They must navigate rapid developments and varied use cases while aiming to enhance productivity and manage risks.

A structured approach can help address AI-related questions

This involves creating a strategic framework for policy makers to think about AI from a whole-of-economy perspective.

Effective governance is crucial for AI integration

It requires dynamic feedback loops and clearly defined responsibilities to adapt to the evolving AI landscape.

All of us are being bombarded with information about how AI is evolving and how it can change our lives. This is exciting, if sometimes overwhelming. For governments, there is a particular challenge in understanding the implications and opportunities of AI’s rapid development and deployment. This is especially so given the imperative for Australia to lift its productivity, and the expectation that AI can help us achieve this.

How to confront that challenge? How can governments help turn the promise of AI into a reality? How can they stay ahead of developments and illuminate the path for others, while taking their own steps towards AI-enabled service delivery? How can they proceed with confidence when the pace of change is rapid, the potential use cases so varied, and the processes fraught with risk and uncertainty?

This article outlines an approach to calming the noise: a structured way to respond to these and similar questions. Future pieces will explore how AI can lift productivity and improve outcomes in different sectors, including within government itself. Here we offer a platform for policy makers and others in government to think about AI from a strategic, whole-of-economy point of view.

Developing a systemic view

The Economic Reform Roundtable produced a list of 10 priority actions to unlock productivity. AI featured in two of these: acceleration of work on an AI plan for the Australian Public Service, and development of a broader national AI capability plan. The first speaks to AI-enabled productivity improvements at the level of an individual enterprise (in this case, the public service itself). The second is about creating the conditions for businesses, governments and other organisations to leverage AI to expand their output at lower cost.

Achieving the latter requires more than supporting and aggregating enterprise-level efforts to adopt AI. It demands a top-down, systemic view of the conditions that enable safe, responsible and productive AI uptake.

Unsurprisingly, there does not yet appear to be a considered and coherent sense of what this AI-friendly policy, regulatory and operating context needs to look like. It is hard enough to imagine what an AI-driven transformation looks like within our own jobs or organisations, let alone at scale across the whole economy.

This is where government has a role: not to control or define the future, but to clarify the public value at stake, and the barriers and enablers to achieving an AI-enabled economy.

Adopting a stewardship mindset

What does it mean to ‘foster the conditions’ for the safe and responsible use of AI? At Nous we think of government as a steward of systems. Acting as a system steward means:

- Considering all available levers, rather than defaulting to regulatory or programmatic responses; and

- Connecting the different strands of the government’s efforts to maintain coherence through rapid change and ensure alignment with the overarching objectives and outcome.

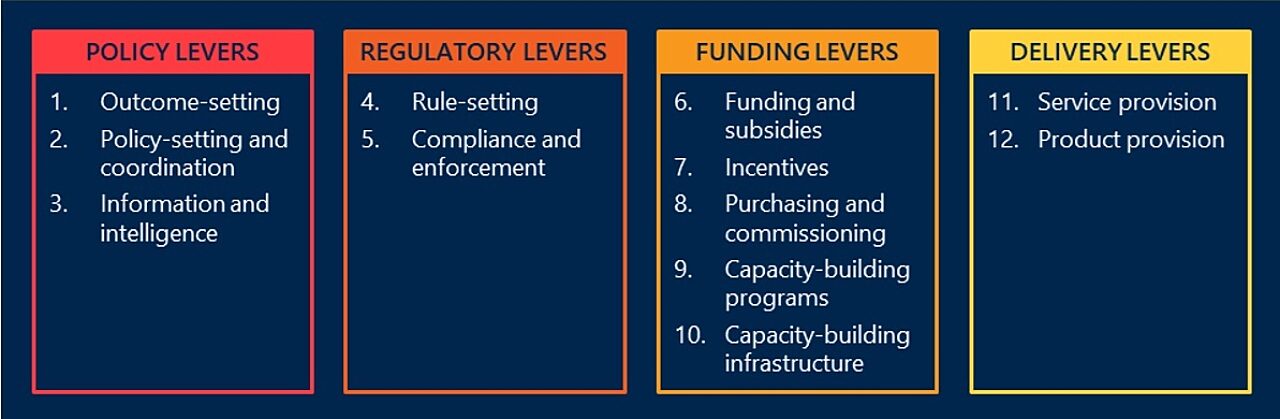

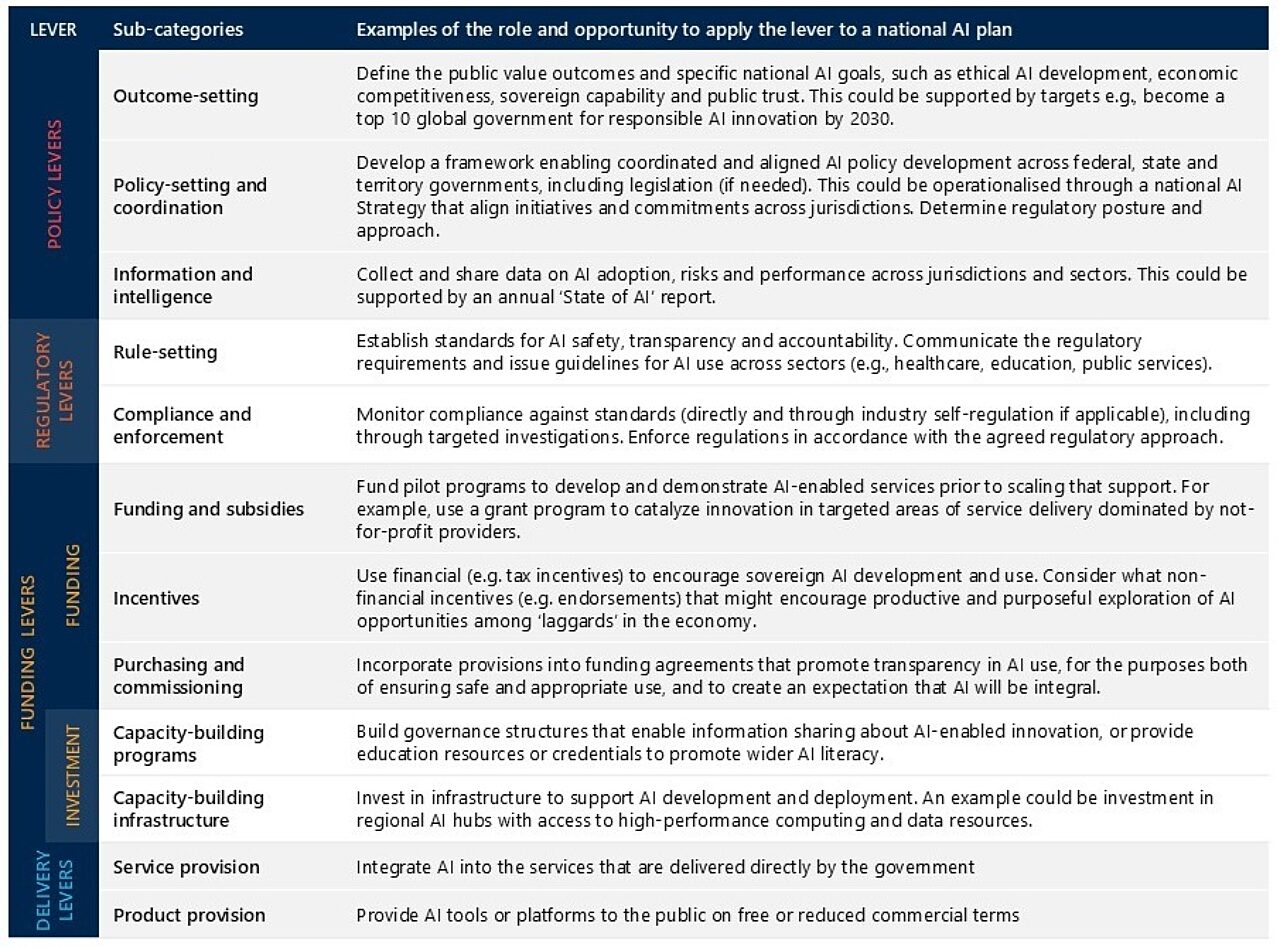

The levers are set out in the figure above, grouped into four categories: policy, regulatory, funding and delivery. These categories are familiar to public policy makers; the value of the framework comes from considering the levers at the next level down (that is, the sub-categories).

This framework, which Nous has tested and refined over the past decade, has proven invaluable both for understanding respective roles (including between levels of government) and for identifying potential policy options and responses.

Setting the policy frame

As with the development of any new strategy or plan, it is important to start with the end goal in mind. This is reflected in the levers framework, whose first category speaks to the role of government in setting outcomes, developing the overarching policy frameworks, and ensuring that there is appropriate information-sharing and data collection to inform monitoring against policy goals, or to inform adjustments to the settings.

Thinking about the productivity challenge, the outcome might be something like “AI-driven innovation that unlocks productive capacity in the economy, increases efficiency and outputs, and contributes to better outcomes for citizens, consumers and businesses”. (This would need to be developed further and agreed, but is offered as a starting point.)

A policy framework is then needed to capture the principles and parameters for achieving that outcome, and to identify the key stakeholders and partners who would need to be involved at a governance level. This is essential to providing the certainty that businesses and investors crave.

The framework might, for example, stipulate that the more specific objectives related to the overall outcome are to: incentivise AI adoption and innovation, ensure appropriate regulation for the safe and responsible development and use of AI, and lead by example.

With that policy framing in place (or while it is being developed), the levers to employ in achieving the desired outcome can then be explored.

Creating the conditions for success

Working methodically through the remaining levers – regulation, funding, delivery – governments can think about the different ways in which they can foster conditions in which productivity-enhancing AI can flourish while downside risks and harms are mitigated.

What this may mean in practice is set out below.

The ideas set out here are indicative only, and they raise questions that cannot be answered by any one person or agency alone. They are offered as a potential basis for structured and complementary conversations that enable development of a coherent approach.

For example, regulators and policy makers can meet to consider the broader implications and opportunities of AI. These discussions can inform how regulation is designed and applied in practice. Those involved in innovation and industry policy can similarly convene to discuss the gaps and barriers that government could address through funding, investment and information levers.

Providing effective governance of a dynamic innovation system

As is often the case, effective governance is a critical success factor for maintaining strategic alignment and coherence. At Nous we interpret ‘governance’ broadly to include not just the decision-making bodies that ‘own’ the collective work, but also the advisory mechanisms, data collection and information-sharing processes that ensure those decisions are evidence-based. The latter is especially important given the rapid evolution of AI and of developments within ‘first mover’ segments of the economy.

A key consideration therefore becomes: “As a system steward, how do we create and sustain a dynamic feedback loop that draws in and channels out information to help government stakeholders stay abreast of risks and opportunities?” Standard processes of monthly meetings with dense papers prepared in advance will not cut it. AI can help, but a different model altogether is more likely to be required.

A similar point arises when we think about the need for clearly defined responsibilities and accountabilities as part of any good governance model. In an area as complex as AI, with action distributed among a multitude of actors, a static view of who is ‘leading’ and who is ‘contributing’ on different initiatives will not be effective. Instead, roles are likely to require continual re-evaluation so that oversight arrangements are fit for the specific objective that is of foremost interest or concern. There cannot be a single controlling authority, though there may be a single coordinating authority. Only dynamic structures will enable real-time collaboration to address regulatory inhibitors and enablers.

The challenges of an AI future may be daunting but they are manageable

The above is deliberately high-level, and seeks to provide a sector-agnostic way ‘in’ to thinking about AI from a policymaker’s perspective. It is a starting point only. More detailed work is required to engage with the specific risks and opportunities associated with the services and sectors for which you are responsible. This is something we will explore in future articles.

So much that is associated with AI feels like a step into the unknown, but with the right anchoring objectives and frameworks to work through, and with the right mindsets to explore new ways of working, the benefits for citizens, organisations and the economy at large can be profound.

Get in touch to discuss how we can help you adopt frameworks to navigate the risks and opportunities of AI.

Connect with Tanya Smith, Will Prothero, and Monique Jackson on LinkedIn.